This How-to applies to:

This article is based on version 12.00, but older versions should work as well.

1. Objectives of this article

Allocating three public IPv4 addresses to an HA cluster is not always possible, and depending on the requirements it is not always necessary.

The objective of this article is to configure an existing HA cluster that has only one public IPv4 address so that the firewall can still be managed from the outside (Internet). This is done by forwarding management traffic sent to the Shared IP to the currently active cluster node.

2. Table of contents

- HA installation schematic.

- The solution using an SLB rule.

- Configuring the SLB rule.

- Changing the needed HA advanced setting.

- Verification.

- Addendum: Accessing both nodes at the same time.

- Question: Will this work towards InControl?

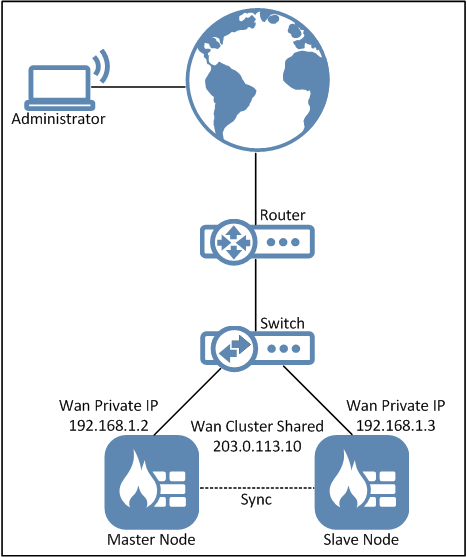

3. HA installation schematic

3.1. The solution

There are several problems that must be solved to make this work. Fortunately, a Server Load Balancing (SLB) IP Policy solves the biggest one: forwarding the management packets to the currently active cluster node. Since the active role can move from one node to the other, we need a mechanism that checks which node is currently active and sends requests only to that node.

The SLB feature works like a static address translation (SAT), but instead of forwarding the traffic to one specific server, it can distribute the traffic across multiple servers using a load distribution algorithm of your choice. The load distribution itself is not relevant in this scenario; what matters is the SLB policy’s ability to monitor the servers in its distribution pool.

When you create an HA cluster, every interface must use an HA object. This object contains the IP addresses that the Master and Slave cluster nodes use on the assigned interface, meaning it contains two IP addresses rather than one. Not all interfaces need valid IPv4HA addresses (i.e. addresses other than 127.x.x.x), since these addresses are mainly used for management, log generation, SMTP polling and similar. For this scenario, however, we must make sure that the external (WAN) interface has three IP addresses (see the schematic above).

Since we only have (or want to use) one public IP address, we give the Master and Slave IP addresses in a private range (192.168.1.2 and 192.168.1.3).
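To make the three-address WAN plan concrete, here is a small Python sketch that checks it with the standard `ipaddress` module. It is only an illustration, not Clavister configuration: the two private addresses come from the article, while the Shared IP `203.0.113.10` (an RFC 5737 documentation address) and the `/24` prefix are assumptions, and `check_wan_plan` is a hypothetical helper.

```python
import ipaddress

# Private HA addresses on the WAN interface, taken from the article's example.
# ASSUMPTIONS: SHARED_IP is a documentation placeholder for your real public
# Shared IP, and the private network is assumed to be a /24.
SHARED_IP = ipaddress.ip_address("203.0.113.10")
WAN_PRIVATE_NET = ipaddress.ip_network("192.168.1.0/24")
HA_WAN_IPS = {
    "master": ipaddress.ip_address("192.168.1.2"),
    "slave": ipaddress.ip_address("192.168.1.3"),
}


def check_wan_plan(shared_ip, private_net, ha_ips):
    """Return a list of problems with the three-address WAN plan (empty list = OK)."""
    problems = []
    if ha_ips["master"] == ha_ips["slave"]:
        problems.append("Master and Slave must use different private IPs")
    for role, ip in ha_ips.items():
        if not ip.is_private:
            problems.append(f"{role} IP {ip} is not in a private range")
        if ip not in private_net:
            problems.append(f"{role} IP {ip} is outside {private_net}")
        if ip == shared_ip:
            problems.append(f"{role} IP {ip} must differ from the Shared IP")
    return problems


print(check_wan_plan(SHARED_IP, WAN_PRIVATE_NET, HA_WAN_IPS))  # []
```

An empty list means the plan has one Shared IP plus two distinct private node addresses in the same private network, which is the layout this article relies on.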

3.2. Configuring the SLB policy

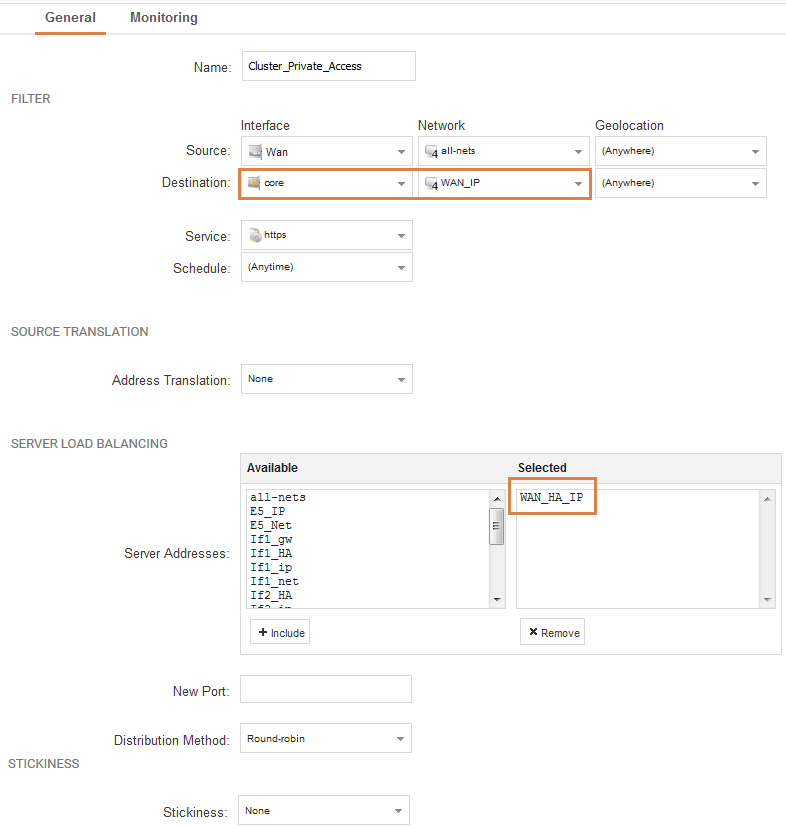

Our example SLB rule is configured with the following parameters:

The destination network (WAN_IP) is the single public Shared IP of the cluster, where we expect incoming management packets.

Note: If you want to restrict management to specific source IP(s) or networks, set them as the source network of the rule. This assumes that the management client host has either a static IP or an FQDN/DNS name that can be used here. Locking down access to the cluster management port(s) is recommended.

The service is HTTPS, as we want to reach the WebUI of the active cluster node.

The interesting part is the object selected for Server Load Balancing. The object in the picture, called “WAN_HA_IP”, is the cluster object that contains the two private IP addresses used by the cluster nodes (192.168.1.2 and 192.168.1.3). With this object, the SLB feature will attempt to balance between these two IP addresses. However, since the inactive node cannot be reached from the active node, only the private IP of the active node will respond.
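The source-network restriction recommended in the note above behaves like an allow-list. The following Python sketch only models that check conceptually and is not Clavister syntax; both allowed networks are hypothetical RFC 5737 documentation ranges, and `management_allowed` is an illustrative helper name.

```python
import ipaddress

# HYPOTHETICAL allow-list; replace with the real management source network(s).
ALLOWED_MGMT_SOURCES = [
    ipaddress.ip_network("198.51.100.0/28"),  # e.g. an office network (.0 - .15)
    ipaddress.ip_network("192.0.2.25/32"),    # e.g. one admin host with a static IP
]


def management_allowed(client_ip, allowed=ALLOWED_MGMT_SOURCES):
    """Model a source-network restriction: accept only clients inside an allowed network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in allowed)


print(management_allowed("198.51.100.7"))   # True
print(management_allowed("198.51.100.16"))  # False (outside the /28)
```

Keeping the allow-list as small as possible (single hosts where a static IP exists) is what “locking down” the management port means in practice.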

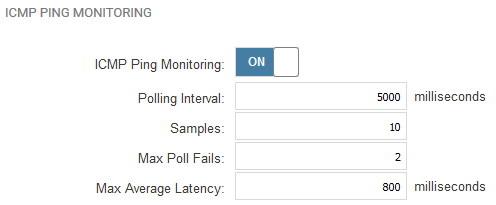

We can take advantage of this by enabling monitoring on our SLB rule, as shown in the image above.

We enable simple ICMP monitoring without any special settings. The only IP that will respond is the private IP of the active cluster node, so SLB will send packets only to that IP, as the other IP fails the monitoring and is declared unavailable.
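The monitoring trick can be illustrated with a toy Python model of an SLB pool. This is not Clavister’s implementation: round-robin is assumed as the distribution algorithm, and the `responds_to_ping` callable stands in for the ICMP probe. Because only the active node’s own private IP passes monitoring, every pick returns that single address.

```python
import itertools


class SlbPool:
    """Toy model of an SLB pool with health monitoring (NOT Clavister's implementation).

    Round-robin distribution is an assumption; it does not matter here because
    at most one server stays online.
    """

    def __init__(self, servers):
        self.servers = list(servers)
        self.online = {s: False for s in self.servers}
        self._rr = itertools.cycle(self.servers)

    def run_monitor(self, responds_to_ping):
        """responds_to_ping(ip) -> bool stands in for the ICMP monitoring probe."""
        for s in self.servers:
            self.online[s] = responds_to_ping(s)

    def pick_server(self):
        """Return the next online server, or None if no server passes monitoring."""
        for _ in range(len(self.servers)):
            candidate = next(self._rr)
            if self.online[candidate]:
                return candidate
        return None


pool = SlbPool(["192.168.1.2", "192.168.1.3"])
# On the active Master, only its own private IP answers the ping.
pool.run_monitor(lambda ip: ip == "192.168.1.2")
print([pool.pick_server() for _ in range(4)])
# ['192.168.1.2', '192.168.1.2', '192.168.1.2', '192.168.1.2']
```

After a failover, the new active node runs the same monitoring and only its own address (192.168.1.3 for the Slave) is declared online.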

Note-1: An IP Policy to allow Core pings is not needed, as the monitoring is initiated from the Core itself.

Note-2: TCP monitoring does not work, as the firewall drops it with the reason “Local_Undelivered”. This is possibly a design limitation, since this how-to basically describes a workaround for a scenario that is not designed to work natively :)

3.3. Changing the needed HA advanced setting

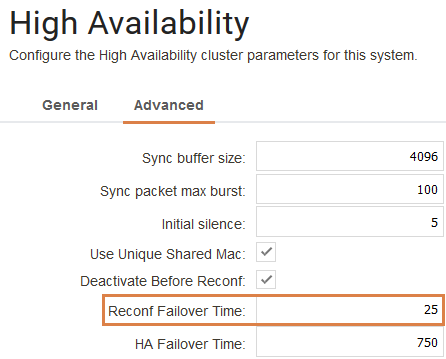

The last thing that needs to be done is to change one of the advanced settings related to the HA cluster. This setting is found under System -> High Availability -> Advanced and is called “Reconf Failover Time”.

We must set a value for this setting because if the cluster performs a role change during a configuration deployment, the deployment cannot be completed.

This setting makes an HA cluster behave a bit like a stand-alone unit. The value is in seconds and is, roughly, how long the inactive node waits for the current active node before deciding that it is dead and taking over. What happens in the background is more complex, but that is roughly what the setting does. In the example we set it to 25 seconds, which is a very high value; 5-10 seconds is more typical.

The value should roughly match the longest time the HA cluster needs to deploy a configuration, assuming the deployment also includes a database update of e.g. IDP and/or Anti-Virus. The exact time a deployment takes can be shown with the “reconfigure –timings” CLI command. Take the longest value and add a couple of seconds.
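The rule of thumb above (longest deployment time plus a couple of seconds) is simple arithmetic, sketched here in Python. The timing values are hypothetical and would be read manually from the reconfigure timings output; the 2-second margin is an assumed interpretation of “a couple of seconds”, and the function name is illustrative only.

```python
import math


def recommended_reconf_failover_time(timings_s, margin_s=2):
    """Longest observed deployment time, rounded up to whole seconds, plus a margin.

    timings_s: HYPOTHETICAL deployment durations in seconds, noted manually
    from several deployments. margin_s: assumed value for "a couple of seconds".
    """
    if not timings_s:
        raise ValueError("need at least one measured deployment time")
    return math.ceil(max(timings_s)) + margin_s


# Hypothetical measurements; the slowest one included an IDP/Anti-Virus update.
print(recommended_reconf_failover_time([4.2, 5.8, 21.3]))  # 24
```

Measuring a deployment that includes a database update matters, since that is usually the slowest case and the one most likely to trigger an unwanted role change.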

The biggest drawback of using this setting is the “hiccup” that usually occurs when the cluster reloads the configuration. Without the setting, the HA cluster makes an immediate failover and users would probably not notice anything at all.

Depending on the speed of the hardware, the CPU load etc., you can expect at least one packet with high latency and/or the loss of one (worst case two) packets.

If the cluster does not make a role change, then when you deploy a configuration to e.g. the active Master node, the bi-directional verification after the reconfiguration is done against the Master, which is still active.

If the setting were not enabled, in the example above you would end up on the now active Slave node, which does NOT have the new configuration you attempted to deploy, and the now inactive Master would fail the 30-second bi-directional verification.
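The two outcomes described above can be summarized as a simplified Python decision model. It follows the rough description in this article only (the real logic is more complex), and both the function and its parameters are hypothetical; `None` stands for the setting not being used, in which case an immediate failover is assumed.

```python
from typing import Optional


def deployment_outcome(reconfigure_s: float, reconf_failover_time_s: Optional[float]) -> str:
    """Simplified model of deploying a configuration to the active Master.

    reconf_failover_time_s=None means the setting is not used; the cluster is then
    assumed to fail over immediately when the Master reloads its configuration.
    """
    if reconf_failover_time_s is None or reconfigure_s > reconf_failover_time_s:
        # The Slave takes over without the new configuration, and the Master
        # fails the 30-second bi-directional verification.
        return "role change: verification fails"
    # The Master stays active, so the verification reaches the node with the new config.
    return "no role change: verification succeeds"


print(deployment_outcome(20, 25))    # no role change: verification succeeds
print(deployment_outcome(20, None))  # role change: verification fails
```

The model also shows why the value must cover the slowest deployment: a reconfiguration that outlasts the configured time behaves as if the setting were not enabled.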

Verification

The most obvious way to test is, of course, to attempt a configuration deployment. In case of problems, a few CLI commands can be used to verify that things look as they should and, if not, to provide a starting point for troubleshooting.

Three commands can be run on each cluster node to verify that the output is as expected: “ha”, “slb” and “hostmon”.

From the active Master:

VSG-23:/> ha
This device is a HA MASTER
This device is currently ACTIVE (will forward traffic)
This device has been active: 431169 sec
HA cluster peer is ALIVE

VSG-23:/> slb
Policy           Server          Online  Maint  Conn  Load
---------------- --------------- ------- ------ ----- -----
Cluster_Private_Access
                 192.168.1.2     Yes     No     -     -

VSG-23:/> hostmon
Monitor sessions:

Session #1:
                                                     Failed      Latency ms
Reachable Proto Host            Port Interval Sample (act / max) (act / max)
--------- ----- --------------- ---- -------- ------ ----------- -----------

Session #2:
                                                     Failed      Latency ms
Reachable Proto Host            Port Interval Sample (act / max) (act / max)
--------- ----- --------------- ---- -------- ------ ----------- -----------
YES       ICMP  192.168.1.2          5000     10     0 / 2       0 / 800

From the inactive Slave:

VSG-24:/> ha
This device is a HA SLAVE
This device is currently INACTIVE (won't forward traffic)
This device has been inactive: 431180 sec
HA cluster peer is ALIVE

VSG-24:/> slb
Policy           Server          Online  Maint  Conn  Load
---------------- --------------- ------- ------ ----- -----
Cluster_Private_Access
                 192.168.1.3     Yes     No     -     -

VSG-24:/> hostmon
Monitor sessions:

Session #1:
                                                     Failed      Latency ms
Reachable Proto Host            Port Interval Sample (act / max) (act / max)
--------- ----- --------------- ---- -------- ------ ----------- -----------

Session #2:
                                                     Failed      Latency ms
Reachable Proto Host            Port Interval Sample (act / max) (act / max)
--------- ----- --------------- ---- -------- ------ ----------- -----------
YES       ICMP  192.168.1.3          5000     10     0 / 2       0 / 800

1. The ha command is run to verify which node is currently active.

2. The slb command is run to verify the status of the SLB servers in the distribution “pool”.

3. The hostmon command is run to get more details about the monitoring. SLB basically “borrows” functionality from Hostmonitor, so this command gives a little more detail about the status of the monitoring.

As the output above shows, each cluster node only sees itself in the SLB and Hostmonitor output. This is due to how HA clusters are designed: the inactive node cannot be accessed from the active node, and vice versa.
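If you check many clusters, the “slb” check can be scripted. The Python sketch below parses the table exactly as it is shown in this article (sample copied from the Master output above) and confirms that a node’s own private IP is the only online server. Other versions may format the output differently, so treat the parser and the helper names as assumptions.

```python
import ipaddress

# Sample copied from the Master's "slb" output in this article.
SAMPLE_SLB_OUTPUT = """\
Policy           Server          Online  Maint  Conn  Load
---------------- --------------- ------- ------ ----- -----
Cluster_Private_Access
                 192.168.1.2     Yes     No     -     -
"""


def parse_slb(text):
    """Parse the slb table into {policy: {server_ip: online}}.

    ASSUMES the layout shown above: a policy name on its own line, followed
    by server rows that start with an IP address.
    """
    result, policy = {}, None
    for line in text.splitlines():
        tokens = line.split()
        if not tokens or tokens[0] == "Policy" or set(tokens[0]) == {"-"}:
            continue  # skip blank, header and separator lines
        try:
            ip = ipaddress.ip_address(tokens[0])
        except ValueError:
            policy = tokens[0]  # a non-IP first token starts a new policy
            result[policy] = {}
            continue
        if policy is not None and len(tokens) >= 2:
            result[policy][str(ip)] = tokens[1].lower() == "yes"
    return result


def only_local_node_online(parsed, policy, local_ip):
    """True if the node's own private IP is the only online server of the policy."""
    online = [ip for ip, up in parsed.get(policy, {}).items() if up]
    return online == [local_ip]


parsed = parse_slb(SAMPLE_SLB_OUTPUT)
print(parsed)  # {'Cluster_Private_Access': {'192.168.1.2': True}}
print(only_local_node_online(parsed, "Cluster_Private_Access", "192.168.1.2"))  # True
```

Run against the Slave’s output, the same check should pass with 192.168.1.3 as the local IP.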

Addendum: Accessing both nodes at the same time

A very brief description of this concept, without going into too much detail:

If you want to access both nodes at the same time using the same Shared IP but different ports (e.g. 881 for the Master and 882 for the Slave), you need two crossed NAT/SAT policies that use the Master’s private IP to access the Slave’s private IP, and the other way around. It also requires two static ARP entries for the private addresses; otherwise ARP resolution fails.
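The crossed-policy idea can be modeled in a few lines of Python, seen from the currently active node. This is a hypothetical sketch, not a tested Clavister configuration: the port numbers and private IPs come from the article, while leaving the source unchanged for the local node and the `Translation` structure are assumptions made for illustration.

```python
from typing import NamedTuple, Optional

NODE_PRIVATE_IP = {"master": "192.168.1.2", "slave": "192.168.1.3"}
PORT_TO_NODE = {881: "master", 882: "slave"}  # example ports from the article


class Translation(NamedTuple):
    src_ip: Optional[str]  # new source address, or None if left unchanged (assumption)
    dst_ip: str            # private IP of the node the administrator wants to reach
    via_peer: bool         # True if the packet crosses to the other node (static ARP needed)


def translate(dst_port: int, active_node: str) -> Optional[Translation]:
    """Hypothetical model of the crossed SAT/NAT policies on the active node."""
    target = PORT_TO_NODE.get(dst_port)
    if target is None:
        return None  # not one of the management ports: no translation applies
    if target == active_node:
        return Translation(None, NODE_PRIVATE_IP[target], False)
    # Reaching the peer: SAT to the peer's private IP, NAT the source to our own private IP.
    return Translation(NODE_PRIVATE_IP[active_node], NODE_PRIVATE_IP[target], True)


print(translate(882, "master"))
# Translation(src_ip='192.168.1.2', dst_ip='192.168.1.3', via_peer=True)
```

The `via_peer` case is where the static ARP entries come in, since the active node must resolve the peer’s private address to deliver the translated packet.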

Question: Will this work towards InControl?

Answer: The base scenario will not work towards InControl; it only works for WebUI / SSH. Accessing both nodes at the same time using different ports “may” work, but this scenario has not been tested or verified by Clavister.
