Objectives of this article:

This article introduces FTP, FTPS and SFTP, and describes best practices for allowing access between clients and servers through a firewall.

The convenience of file transfer, and the inconvenience of allowing access:

FTP has long been one of the most common ways of transferring files between remote locations. It was originally intended as a standardised means of transferring files with little risk of data corruption by relying on TCP, which detects lost or damaged packets between client and server and retransmits them.

This is typically achieved by initiating communication on a control channel (commonly TCP port 21), and then setting up data channels over either fixed or random ports: usually port 20 when fixed, or a port from a range such as 10000-20000 when random. Unfortunately, for the sake of convenience, many vendors have opted to use a random port range. This presents a problem for firewalls, which would have to open every port in that range for a given client, and the more clients involved, the more ports potentially need to be opened. To work around this, firewall vendors use Application Layer Gateways (ALGs), which track connections that originate on one port and then dynamically allow the additional ports those connections set up.
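To make the ALG's job concrete, the sketch below shows the kind of parsing involved when a server announces a passive-mode data port on the plaintext control channel. In FTP, the `227` reply carries six numbers `(h1,h2,h3,h4,p1,p2)`, and the data port is `p1 * 256 + p2`. The function name `parse_pasv_reply` is hypothetical and this is only an illustration of the principle, not how any particular firewall implements its ALG.

```python
import re

# Six comma-separated numbers, as found in a reply such as
# "227 Entering Passive Mode (192,0,2,10,39,16)".
_PASV_TUPLE = re.compile(
    r"(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3})"
)


def parse_pasv_reply(line: str) -> tuple[str, int]:
    """Return the (host, port) advertised in a 227 passive-mode reply.

    Hypothetical helper for illustration only.
    """
    if not line.startswith("227"):
        raise ValueError("not a 227 passive-mode reply")
    match = _PASV_TUPLE.search(line)
    if match is None:
        raise ValueError("no host/port tuple found in reply")
    h1, h2, h3, h4, p1, p2 = (int(group) for group in match.groups())
    if max(h1, h2, h3, h4, p1, p2) > 255:
        raise ValueError("value out of range for a single byte")
    # The port is sent as two bytes: high byte first, then low byte.
    return f"{h1}.{h2}.{h3}.{h4}", p1 * 256 + p2


if __name__ == "__main__":
    host, port = parse_pasv_reply("227 Entering Passive Mode (192,0,2,10,39,16)")
    print(host, port)
```

An ALG that can read this reply knows exactly which single port to open for the upcoming data connection. Once the control channel is encrypted, the reply is no longer readable by the firewall, so this approach stops working.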

Later, as the need for security grew, FTPS (FTP over TLS) was developed to prevent interception or sniffing of data between client and server. For the most part, however, FTPS still operates much like FTP, in that a control channel (port 21) and a set of data channels (often on random ports) are required.

SFTP addresses this issue. It is a separate protocol that runs over SSH and carries both control and data traffic over a single port (22 by default), which provides security through encryption as well as predictable port assignment that is easier for firewalls to manage.

In the scenarios above, FTP port usage can be assisted by an ALG, so that only one port needs to be open while the ALG tracks the rest dynamically. FTPS can’t function with an ALG, because the encrypted control channel hides the port negotiation from the firewall. So, while access can be granted to FTP with a single port and an ALG, it’s still insecure, as everything is sent in plaintext. FTPS, on the other hand, is secure as it’s encrypted, but its ports can’t be tracked, so many have to be opened.

So, these are the three main options for file transfer, each with its own advantages. While not discussed here in detail, active and passive communication is also a factor to consider, as it determines whether the client or the server chooses which ports are used for data channels. In any case, the aim here is to present the most secure of these choices for use with a firewall.

Which technology to choose:

While we can’t recommend a specific software vendor, we can recommend features to look for. If security is a concern and file transfer is to take place through a firewall, FTPS or SFTP are the most appropriate choices by today’s standards, as sending anything in plaintext over the internet exposes it to interception and attack.

The next consideration is to go against the common habit of valuing convenience over security, and instead choose security over convenience. While most FTP vendors make setup relatively simple by requiring only a valid address and user credentials to connect, other vendors allow more advanced configuration of how communication takes place. We would recommend choosing software that allows the data channel ports to be configured, which allows much tighter integration with firewalls.

For instance, instead of having to open up ports 10000-20000, you could choose FTPS software which can be configured to use a much smaller range of ports for data channels. One example would be to set up only 10 ports for data channels per user, which should be enough for sending and receiving files in most scenarios. So, if you have around 10 users connecting to a single server, you could open up roughly 100 ports in the range 10000-10100, resulting in a much smaller attack surface and tighter control over what is allowed.
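The sizing arithmetic above can be sketched as a small helper. The function name `passive_port_range` and its parameters are hypothetical, chosen only for this illustration. Note that exactly 100 ports starting at 10000 end at 10099, so the rounded range 10000-10100 used in this article contains one spare port.

```python
def passive_port_range(
    users: int, ports_per_user: int, first_port: int = 10000
) -> tuple[int, int]:
    """Return the inclusive (first, last) data port range for a server.

    Hypothetical helper: sizes the range as users * ports_per_user.
    """
    if users < 1 or ports_per_user < 1:
        raise ValueError("users and ports_per_user must be at least 1")
    port_count = users * ports_per_user
    # The range is inclusive, so the last port is first + count - 1.
    last_port = first_port + port_count - 1
    if not (1 <= first_port <= last_port <= 65535):
        raise ValueError("range does not fit in the valid TCP port space")
    return first_port, last_port


if __name__ == "__main__":
    first, last = passive_port_range(users=10, ports_per_user=10)
    print(f"Open {last - first + 1} ports: {first}-{last}")
```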

Best practices for configuring FTP:

As discussed, FTPS (or even SFTP) would be the preferred means of file transfer. As we can’t inspect encrypted traffic, we don’t currently have an ALG that can handle random port assignment for FTPS, which is why every port the server might use needs to be opened. For this reason, choosing a vendor which allows the data channel ports to be configured is desirable. With that requirement met, our firewall can be configured for the following use case:

Number of Users: 10

Protocol: FTPS

Control Channel: Port 21

Data Channels: 10000-10100

This will allow around 10 data channels per user, which should be enough simultaneous transfers for most file transfer scenarios.
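As a rough model of what this use case means for the firewall, the sketch below checks whether a destination port would fall inside the permitted set (control port 21 plus data ports 10000-10100). The class `FtpsService` and its method `permits` are hypothetical names for illustration and are not part of any firewall's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FtpsService:
    """Hypothetical model of the service described in the use case."""

    control_port: int = 21
    data_first: int = 10000
    data_last: int = 10100

    def permits(self, dest_port: int) -> bool:
        """Return True if dest_port is the control port or a data port."""
        if dest_port == self.control_port:
            return True
        # Both ends of the data port range are inclusive.
        return self.data_first <= dest_port <= self.data_last


if __name__ == "__main__":
    service = FtpsService()
    for port in (21, 10050, 10101, 20000):
        print(port, "allowed" if service.permits(port) else "dropped")
```

Anything the FTPS server tries to use outside this set would be dropped, which is why the server's configured data port range has to match the firewall service exactly.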

Firewall configuration:

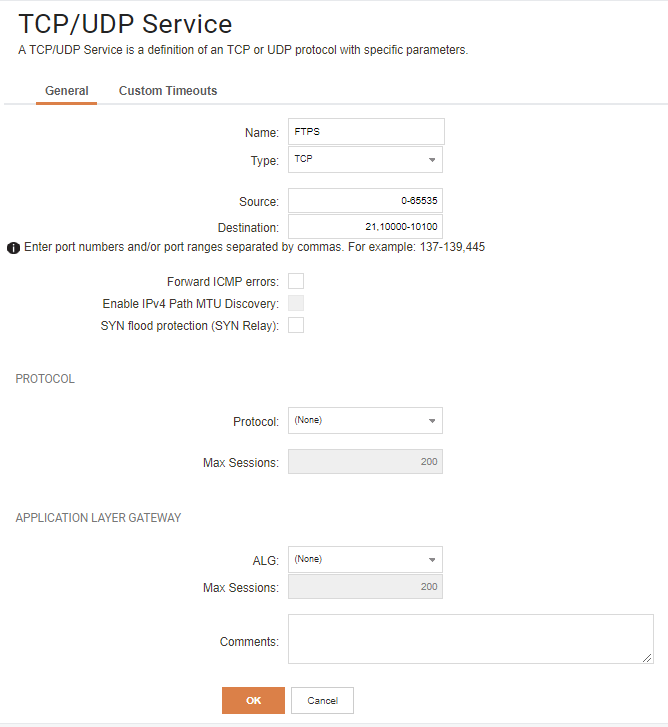

Based on the above requirements, the firewall can be set up as follows:

1: Configure a service which allows access to control and data channel ports as follows.

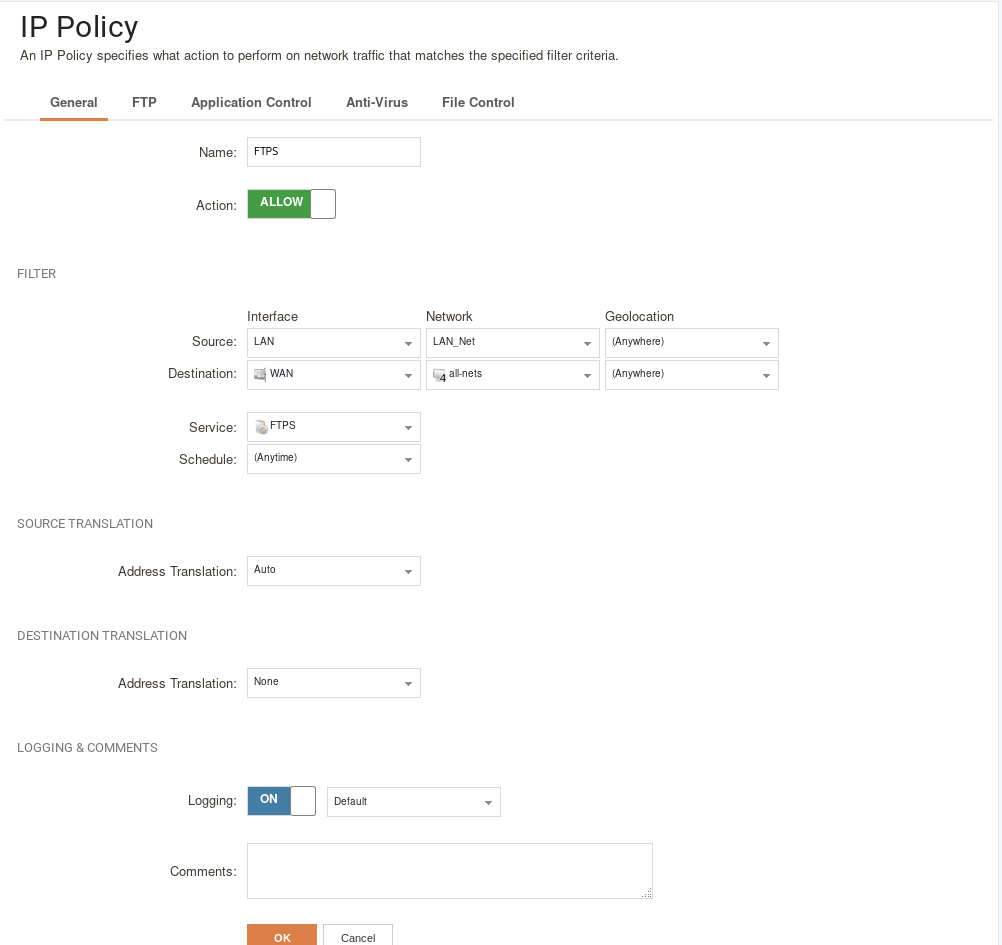

2: Create an IP Policy which uses this service from and toward desired locations.

This should be enough to allow communication with an FTPS server which uses a fixed set of data channel ports. Because FTPS is encrypted, it should be relatively safe to allow access over the internet. However, to further secure this setup, we could also use Application Control to ensure that all communication follows normal FTPS behaviour. This can be set up as follows:

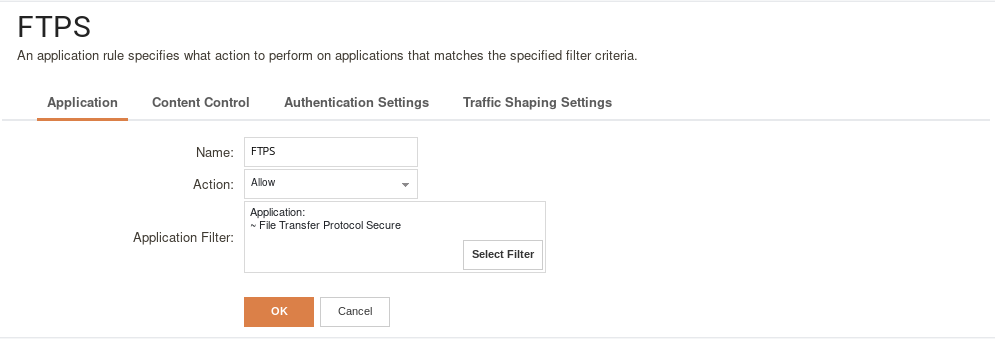

3: Create an Application Control Profile which uses FTPS as an application filter:

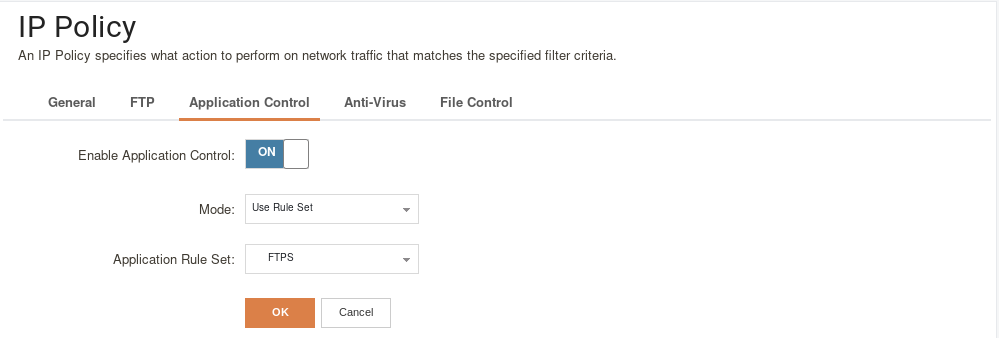

4: Modify IP Policy to use Application Control profile:

With this setup, we should now have a safe means of sending files over the internet, while keeping the attack surface on the firewall as small as possible. FTPS also protects the integrity of the data in transit. While this may be a bit more work than using software which relies on random ports, the extra effort should be minimal, and much tighter control can be maintained.
